Two recognised and respected thinkers, Nassim Taleb (author of “The Black Swan”, “Antifragile” and several other bestsellers on estimating the future) and Philip Tetlock (author of “Superforecasting: The Art and Science of Prediction”), hold largely opposing views on the controversial issues surrounding forecasting.

That makes it all the more striking to find Taleb and Tetlock agreeing on the need to rate the accuracy of analytic estimates and to hold analysts accountable for it. Taleb limits his proposal to a passionate plea for “making analysts put skin in the game”. Tetlock’s suggestion is less emotional and focuses on using the Brier score to assign an accuracy rating to each analyst.
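To make the idea of an accuracy rating concrete, here is a minimal sketch of a Brier score for yes/no questions. It assumes the common binary form (mean squared error, ranging from 0 to 1); the original multi-category formulation sums over all outcome categories and ranges from 0 to 2. The function name and the two analysts' track records are hypothetical, invented purely for illustration.

```python
def brier_score(forecasts, outcomes):
    """Mean squared difference between forecast probabilities and outcomes.

    forecasts: probabilities in [0, 1] that each event will happen.
    outcomes:  1 if the event happened, 0 if it did not.
    Lower is better: 0.0 is perfect, 0.25 is what constant 50% hedging earns.
    """
    if not forecasts or len(forecasts) != len(outcomes):
        raise ValueError("need two non-empty lists of equal length")
    squared_errors = [(p - o) ** 2 for p, o in zip(forecasts, outcomes)]
    return sum(squared_errors) / len(squared_errors)


# Hypothetical track records on the same four yes/no questions.
outcomes = [1, 1, 0, 0]
bold_analyst = [0.9, 0.8, 0.2, 0.7]      # confident, badly wrong on the last one
hedging_analyst = [0.5, 0.5, 0.5, 0.5]   # never commits either way

print(round(brier_score(bold_analyst, outcomes), 3))     # 0.145
print(round(brier_score(hedging_analyst, outcomes), 3))  # 0.25
```

In this made-up example the bold analyst still scores better than the permanent hedger despite one confident miss, which is the kind of accountability a per-analyst rating is meant to provide.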

One issue at the core of this debate is whether the future is predictable at all. Taleb postulates decisively that futures are random, and randomness cannot be predicted. Tetlock’s view sits near the opposite end of the continuum. Based on the experience of the Good Judgment Project[i], he argues that while limits on predictability certainly exist, that does not mean that ALL predicting is an exercise in futility. At the very least, certain types of futures can be reliably forecast. Tetlock accepts, though, that the accuracy of expert predictions beyond the five-year horizon “declines towards chance”. Even that is a pretty generous allowance: to avoid going completely off course, estimates typically need to be corrected after six months at most.

As a start, a historical probability baseline can nowadays be found for almost everything. Using this outside number as a benchmark converts a ballpark guess into an estimate. But grounding the initial estimate in historical figures puts it on shaky ground. In foreign affairs in particular, the median probability of many a specific outcome (say, the propensity to use force) has been fairly capricious over the past five decades or so.

Counterfactual thinking involves reasoning about how something might have turned out differently than it actually did. At first glance that might look like a sterile debate with no useful purpose. What has happened is an accomplished fact, right? Right, but the past was a random outcome, as random as our future will be.

Accepting the infinite plurality of possible pasts helps us come to grips with the similarly infinite plurality of possible futures – radical indeterminacy – so that we can prepare and act accordingly.

Among several core threads, Tetlock backs continuous monitoring and frequent updating of forecasts. He argues that an updated forecast is likely to be a better-informed forecast and therefore a more accurate one. A number of caveats to this line of argument are worth exploring.

For one thing, mind-sets filter new information and reject whatever contradicts the initial hypothesis. Periodic exposure to new information offers no guarantee that it will in fact be used to update a forecast rather than to entrench the initial one even more deeply.

For another, most sources display an absolutely horrendous signal-to-noise ratio. Exposure to more noise is hardly likely to improve forecast accuracy. In essence, the issue is how to detect and monitor the development of one particular forecast or scenario among many against a backdrop of ambient noise that drowns out potentially useful signals.
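The noise caveat can be illustrated with a small sketch of forecast updating in the odds form of Bayes' rule. This is one standard way to formalise an update, not necessarily the procedure Tetlock's forecasters follow; the function name, the prior and the likelihood ratios below are all hypothetical. The likelihood ratio says how much more probable the new evidence is if the forecast event is coming than if it is not; a ratio close to 1 means the evidence is essentially noise.

```python
def update_forecast(prior, likelihood_ratio):
    """Return the updated probability after one piece of evidence.

    prior:            current probability of the event, strictly between 0 and 1.
    likelihood_ratio: P(evidence | event) / P(evidence | no event), must be > 0.
    """
    if not 0.0 < prior < 1.0:
        raise ValueError("prior must be strictly between 0 and 1")
    if likelihood_ratio <= 0.0:
        raise ValueError("likelihood ratio must be positive")
    prior_odds = prior / (1.0 - prior)
    posterior_odds = prior_odds * likelihood_ratio
    return posterior_odds / (1.0 + posterior_odds)


prior = 0.30  # hypothetical starting forecast

# A genuinely diagnostic signal moves the forecast a lot (to roughly 0.63).
print(round(update_forecast(prior, 4.0), 2))

# A noisy item, almost equally likely either way, barely moves it (roughly 0.31).
print(round(update_forecast(prior, 1.05), 2))
```

However many noisy items arrive, each one leaves the forecast close to where it started, so frequent updating helps only to the extent that the incoming information is actually diagnostic.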

Learning to forecast requires practice. Reading books is a good start, but it is no substitute for hands-on experience. There is a caveat, though: when it comes to thinking and reasoning skills, only informed practice will improve them. To learn from failure, one must first know when one has failed. This is the fairly evident prerequisite for understanding why one has failed, correcting course and trying again. This requirement becomes an even bigger complication when dealing with estimates in foreign policy analysis or the evolution of strategic threats.

Extracting meaningful feedback on the accuracy of vague forecasts whose due dates lie far in the future is a formidable task, even more so when the vagueness and resulting elasticity of estimates are intentional. Besides, at evaluation time hindsight bias kicks in to distort one’s recollection of the precise state of one’s un-knowledge about the future. That makes it impossible to pass judgement on the ultimate accuracy of a forecast, or on its utility. Probability estimates can be reasonably accurate when dealing with deterministic problems. But such problems are relatively rare in real life in general, and in international policy analysis in particular. However, where there truly is a will, a way can be found. Once again, the simple but powerful structured technique of problem decomposition can raise the accuracy of predictive analysis.

If the issue at hand is too big or too complex, its components may well turn out to be more manageable. By separating the knowable from the unknowable parts and checking key assumptions, remarkably good probability estimates can arise from remarkably crude iterations of guesstimates. It looks simple, but only at first glance.
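One way to picture decomposition is as a chain of conditional guesses, each given as a crude low/high range, multiplied together. The sketch below assumes the big question can be broken into steps that must all happen in sequence; that structure, the function name and every number are hypothetical and serve only to show the mechanics.

```python
from math import prod


def chain_estimate(components):
    """Combine crude per-step guesses into a range for the overall outcome.

    components: list of (label, low, high), where low and high bound the
    probability of that step given that all earlier steps have happened.
    Returns (overall_low, overall_high).
    """
    for label, low, high in components:
        if not 0.0 <= low <= high <= 1.0:
            raise ValueError(f"bad range for {label!r}: {low}..{high}")
    overall_low = prod(low for _, low, _ in components)
    overall_high = prod(high for _, _, high in components)
    return overall_low, overall_high


# Hypothetical decomposition of one big question into three smaller ones.
steps = [
    ("step 1 happens", 0.5, 0.8),
    ("step 2 happens, given step 1", 0.2, 0.5),
    ("step 3 happens, given steps 1 and 2", 0.5, 1.0),  # largely unknowable
]

low, high = chain_estimate(steps)
print(f"overall probability between {low:.2f} and {high:.2f}")
```

Writing the wide 0.5 to 1.0 range down explicitly is the point of the exercise: it shows which component is the unknowable one and how much of the overall uncertainty it accounts for.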

When using problem decomposition, a key analytic task is to figure out:

- what we know we know,

- what we don’t know we know,

- what we know we don’t know and, above all,

- what we don’t know we don’t know.

One has to reckon with the fact that a plethora of cognitive biases will dull the thinking ability required to accomplish these feats of reasoning. All individuals assimilate and evaluate information through the medium of mental models or mind-sets. These are experience-based constructs of assumptions and expectations. Such constructs strongly influence which incoming information an analyst will accept and which they will discard. A mind-set is the mother of all biases. Being an aggregate of countless biases and beliefs, a mind-set represents a giant shortcut of the mind. Mind-sets are immensely powerful mechanisms with an extraordinary capability to distort our perception of reality. The key risks presented by mind-sets include[ii]:

- MIND-SETS MAKE ANALYSTS PERCEIVE WHAT THEY EXPECT TO PERCEIVE. Events consistent with prevailing expectations are perceived and processed easily. Events that contradict these tend to be ignored or distorted in perception. This tendency of people to perceive what they expect to perceive is more important than any tendency to perceive what they want to perceive.

- MIND-SETS TEND TO BE QUICK TO FORM BUT RESISTANT TO CHANGE. Once an analyst has developed a mind-set concerning the phenomenon being observed, expectations that influenced the formation of the mind-set will condition perceptions of future information about this phenomenon.

- NEW INFORMATION IS ASSIMILATED INTO EXISTING MENTAL MODELS. Gradual, evolutionary change often goes unnoticed. Once events have been perceived one way, there is a natural resistance to other perspectives.

- INITIAL REACTION TO BLURRED OR AMBIGUOUS INFORMATION RESISTS CORRECTION EVEN WHEN BETTER INFORMATION BECOMES AVAILABLE. Humans are quick to form some sort of tentative hypothesis about what they see. The longer they are exposed to this blurred image, the greater the confidence they develop in this initial impression. Needless to say, that impression is often erroneous.

A mind-set will distort information and lead to misperception of intentions and events. Such distortions can result in miscalculated estimates, inaccurate inferences, and erroneous judgments.

Structured analytic techniques are specifically intended to foster collaboration and teamwork. Team estimates and judgments reflect a consensus among team members, which blunts and dilutes the intensity of individual biases. Group work is the best, if not the only, known remedy against cognitive biases. On top of that, working in a team offers one other advantage: a group’s consensus judgment is consistently more accurate than that of its average member.

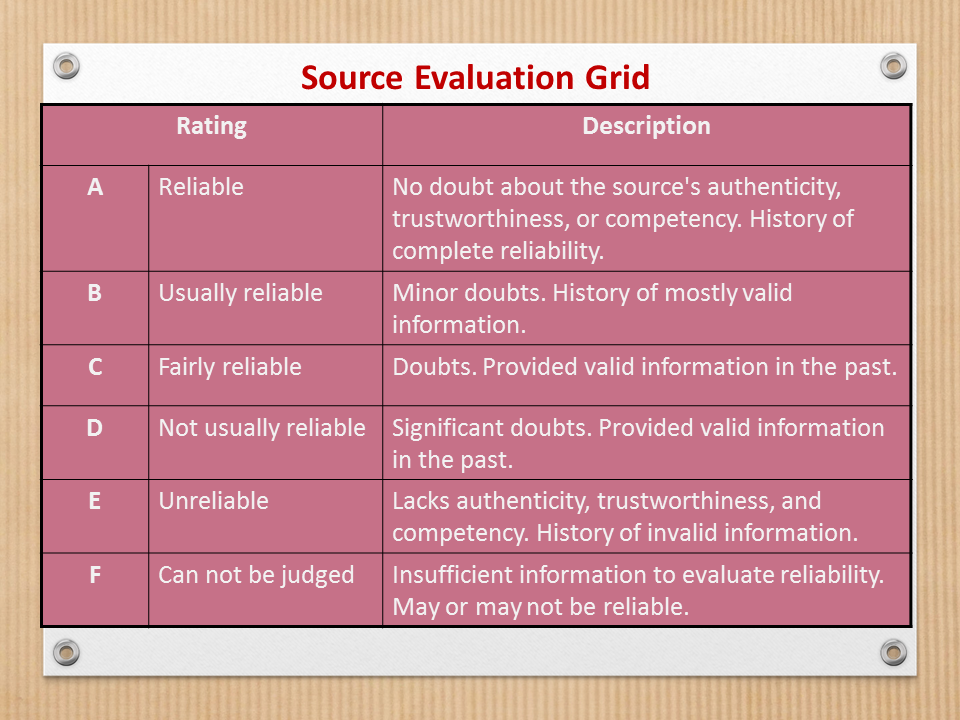
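The advantage of a group judgment over its average member can be checked with a few lines of arithmetic. The sketch below swaps in a plain average of individual estimates for the "consensus", which is an assumption (a real team would reach consensus through discussion), and uses invented numbers for a question with a single correct answer.

```python
from statistics import mean


def consensus_vs_members(estimates, truth):
    """Compare the error of the averaged estimate with the average member error.

    Returns (consensus_error, average_member_error), both as absolute errors.
    """
    consensus = mean(estimates)
    consensus_error = abs(consensus - truth)
    average_member_error = mean(abs(e - truth) for e in estimates)
    return consensus_error, average_member_error


# Hypothetical team of five estimating a quantity whose true value is 100.
team_estimates = [80, 95, 110, 130, 100]
consensus_error, member_error = consensus_vs_members(team_estimates, truth=100)

print(consensus_error)  # error of the averaged estimate
print(member_error)     # error a typical member makes alone
```

Individual errors in opposite directions partly cancel in the average, so with absolute error the averaged estimate can never do worse than the average member; how much better it does depends on how much the members' errors differ in direction.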

One caveat is that this holds true only when solving riddles, i.e. analytic problems that have a single correct answer. A second caveat is that working in a group presents certain challenges of its own. A central one is the evaluation of incoming information. In a typical setup, different team members will be responsible for collecting information from different sources. These discrete data sets are then collated into a database to which all team members have access. When accessing information obtained by others, team members have no way of judging its accuracy or the reliability of the source from which it was obtained. Inferences, estimates and judgements based on potentially inaccurate or unreliable information will inevitably be potentially inaccurate and unreliable as well.

Such risks are mitigated to some extent by the mandatory use of uniform grids that separately evaluate the accuracy of the information and the reliability of the source. Each piece of incoming information is thus graded. Every analyst granted access to a piece of information sees specific grading codes that help in judging how this information can be used.
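A grading grid of this kind can be sketched as a small data structure. The example assumes a two-axis scheme in the style of the widely used Admiralty (NATO) system, with a letter A to F for source reliability and a digit 1 to 6 for information credibility; the text does not say which grid is meant, so the scheme, the paraphrased labels, the class name and the sample report are all illustrative assumptions.

```python
from dataclasses import dataclass

# Assumed scales, paraphrasing the commonly cited Admiralty-style wording.
SOURCE_RELIABILITY = {
    "A": "completely reliable",
    "B": "usually reliable",
    "C": "fairly reliable",
    "D": "not usually reliable",
    "E": "unreliable",
    "F": "reliability cannot be judged",
}
INFORMATION_CREDIBILITY = {
    1: "confirmed by other sources",
    2: "probably true",
    3: "possibly true",
    4: "doubtful",
    5: "improbable",
    6: "truth cannot be judged",
}


@dataclass(frozen=True)
class GradedReport:
    """One piece of incoming information with its two independent grades."""
    text: str
    source_reliability: str       # key of SOURCE_RELIABILITY
    information_credibility: int  # key of INFORMATION_CREDIBILITY

    def __post_init__(self):
        # Reject grades outside the grid so every stored report is comparable.
        if self.source_reliability not in SOURCE_RELIABILITY:
            raise ValueError(f"unknown source grade: {self.source_reliability!r}")
        if self.information_credibility not in INFORMATION_CREDIBILITY:
            raise ValueError(f"unknown credibility grade: {self.information_credibility!r}")

    @property
    def code(self):
        """Compact grading code shown to every analyst, e.g. 'B2'."""
        return f"{self.source_reliability}{self.information_credibility}"

    def describe(self):
        return (f"[{self.code}] source {SOURCE_RELIABILITY[self.source_reliability]}, "
                f"information {INFORMATION_CREDIBILITY[self.information_credibility]}")


report = GradedReport("Hypothetical field report.", "B", 2)
print(report.describe())
```

Keeping the two grades separate matters: a usually reliable source can pass on a doubtful claim, and an untested source can supply information that other reporting confirms.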
